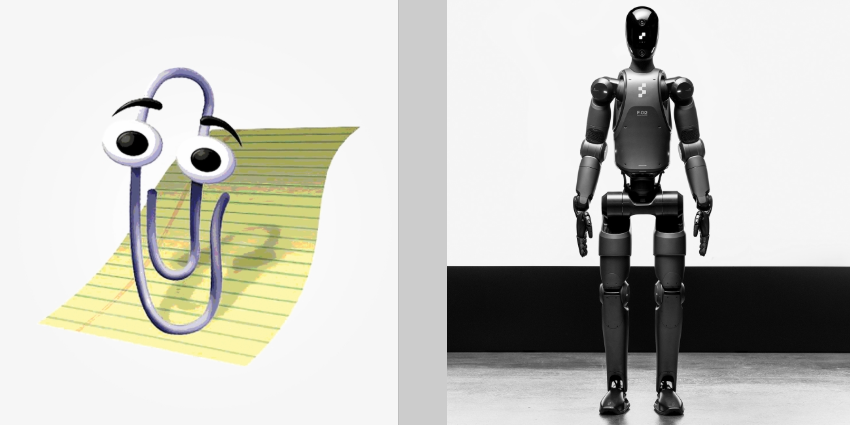

Remember Clippy (officially named Clippit), that overeager animated paperclip who popped up uninvited in Microsoft Office with his big googly eyes and questionable advice?

Tap, tap. “It looks like you’re writing a letter. Would you like help?” Umm – I’m OK thanks.

Fast forward to today, and we’re having philosophical debates with Claude, coaching ChatGPT through coding challenges, and watching as AI assistants diagnose medical conditions, write poetry, and generate photorealistic images from text. This is in less than 30 years – we’ve come a long old way.

The pace of change is mind-boggling.

Clippy lingered for a decade before being retired, while today’s AI models seem to leapfrog each other every few months. Are we witnessing an exponential growth curve? Consider this: it took 25 years to go from Clippy to ChatGPT, but just 18 months to go from ChatGPT to AI systems that can reason through complex problems, generate code, and even control computers.

Where might we be in another decade? Will AI assistants become indistinguishable from human colleagues? Will they manage our schedules, write our emails, draft our presentations, and anticipate our needs before we’re even aware of them? One thing’s for certain: the digital assistants of tomorrow will make today’s AI look as quaint as Clippy does now.

Let’s trace this remarkable journey from animated office supplies to sophisticated AI partners:

The Timeline of AI Interfaces

The Clippy Era (1996-2007)

1996-1997: Microsoft Clippy Debuts

- Microsoft Office 97 (1996): Introduces “Office Assistant” featuring Clippit (AKA Clippy), an animated paperclip that uses Bayesian algorithms to predict user needs, with technology derived from Microsoft Bob

Despite its innovation, Clippy became notorious for its interruptions. It was turned off by default in Office XP (2001) and removed completely in Office 2007. However, it left important lessons about AI interface design.

Clippy was one of the first mainstream attempts to create an interactive AI helper, establishing both the possibilities and the pitfalls for future developments.

Early Voice Assistants (2011-2015)

2011-2014: Voice Takes Center Stage

- Apple’s Siri (2011): The first mainstream mobile voice assistant, initially named “Assistant” before the name “Siri” was chosen for being short and easy to pronounce

- Amazon Alexa (2014): Launched with Echo, bringing voice assistants into homes

- Google Now (2012) and Microsoft Cortana (2014) expand the ecosystem

- IBM Watson (2011) demonstrates AI potential by winning Jeopardy! – an important milestone in AI’s public visibility

This period established voice as the primary interface for AI, though capabilities remained limited to simple commands and basic information retrieval.

The Deep Learning Revolution (2015-2019)

2016-2017: AI Foundations Strengthen

- Google DeepMind’s AlphaGo defeats world champion Go player Lee Sedol (2016)

Voice assistants become more conversational with improved language understanding, and integration with smart home devices greatly expands.

The underlying AI models become more sophisticated, although still limited in contextual understanding and complex reasoning.

2018-2019: Language Models Transform Interfaces

- BERT (2018): Google’s model revolutionizes natural language processing

- GPT-2 (2019): OpenAI demonstrates impressive text generation

Early large language models showed improvements in understanding context and generating human-like responses.

The groundwork was laid for a new generation of more capable AI assistants that could maintain conversation context and generate more natural language.

The Generative AI Era (2020-Present)

2020-2022: Language Models Accelerate

- GPT-3 (2020): OpenAI’s 175-billion parameter model shows unprecedented text generation capabilities

- DALL-E (2021): OpenAI introduces text-to-image generation, expanding AI into visual creativity

- Anthropic Founded (2021): Focusing on AI safety and developing reliable, interpretable systems

Traditional assistants begin falling behind newer AI technologies in understanding and generating natural language. AI interfaces begin shifting from single-turn commands to multi-turn conversations with memory.

2021-2022: The Image Generation Evolution

- DALL-E (January 2021): OpenAI introduces its first text-to-image generation model, capable of creating images from text descriptions

- Midjourney (March 2022) and Stable Diffusion (August 2022) launch, democratizing AI image creation and triggering an explosion of AI art

- DALL-E 2 (April 2022): OpenAI’s improved model brings photorealistic image generation to the masses

These tools greatly enhance creative possibilities, allowing anyone to generate visual content from text prompts and sparking new discussions about art, copyright, and creativity.

Late 2022-2023: Conversational AI Competition

- ChatGPT (November 2022): Acquires over 1 million users in just 5 days, becoming one of the fastest-growing consumer applications in history

- Claude (March 2023): Anthropic releases its AI assistant in two versions: the full-featured Claude and lighter Claude Instant

- GPT-4 (March 2023): OpenAI adds multimodal capabilities for processing both text and images

- Claude 2 (July 2023): Expands the context window to 100,000 tokens (about 75,000 words)

- Microsoft’s Copilot: Integrates LLMs into Office and Windows, moving away from Clippy’s failures toward sophisticated AI assistance

- Google’s Gemini/Bard: Google responds with its own AI solutions

The focus shifts from command-based interfaces to collaborative AI partners that can understand nuanced requests.

2024-2025: AI Video Generation Arrives

- OpenAI’s Sora (February 2024): Unveiled as a text-to-video AI capable of generating realistic videos up to one minute long

- Sora Public Release (December 2024): Made available to the public, allowing users to create high-definition videos up to 20 seconds long with various resolutions and aspect ratios

- Competing Models: Rise of alternatives like Runway’s Gen-3, Kuaishou’s Kling, and open-source models like Open-Sora

These technologies are another big leap in generative AI, blurring the boundary between real and generated content and opening new possibilities for filmmaking, marketing, and entertainment.

2023-2024: Beyond Software to Embodied AI

While AI assistants evolved in the digital realm, companies like Tesla, Figure AI, and others began developing humanoid robots designed to bring AI capabilities into the physical world. Tesla’s Optimus robot has demonstrated tasks like sorting objects and navigating factory environments, and Figure’s humanoids have found employment in BMW’s factories.

Figure’s robots aren’t just impressive – they’re useful too

2024-2025: Advanced AI Assistants Emerge

- Claude 3 Family (March 2024): Three models including Haiku (for speed), Sonnet (balanced), and Opus (complex reasoning)

- Claude 3.5 Sonnet (June 2024): Introduces the Artifacts feature for generating and interacting with code snippets

- OpenAI o1 (September 2024): Enhanced reasoning for complex problems in research, coding, math, and science

- Claude Computer Use (October 2024): Enables Claude to interact with a computer’s desktop environment, navigating interfaces and performing tasks

- ChatGPT Tasks (January 2025): Adds time-based functions like reminders and notifications

- Claude 3.7 Sonnet (February 2025): A “hybrid reasoning” model responding quickly to simple queries while taking more time for complex problems

- Claude Code (February 2025): An agentic command line tool for coding tasks

AI interfaces like OpenAI’s ‘Deep Research’ gain the ability to break down complex problems step-by-step, showing their reasoning process.

Specialized AI assistants emerge for specific domains like healthcare, education, and scientific research, and agentic AI starts being integrated into workplaces to do ‘human’ tasks.

AI Today and AI Tomorrow

Today’s AI interfaces feature advanced reasoning capabilities, multimodal interaction (text, images, audio), and personalized experiences. The distinction between “assistants” and “chatbots” is becoming blurred as systems like Claude and ChatGPT incorporate features from both paradigms.

AI assistants have evolved from simple task-oriented helpers to sophisticated reasoning partners capable of complex problem-solving, creative content generation, and extended contextual conversations. They combine the approachability of earlier assistants with vastly improved capabilities for understanding and responding to user needs.

Challenges include privacy concerns as AI assistants handle more personal data, the development of appropriate global regulations, and maintaining user trust as capabilities increase. The technological race continues among OpenAI, Anthropic, Google, Meta and others, each pushing boundaries in different directions.

Future AI interfaces will likely feature more agentic capabilities (taking autonomous actions on behalf of users), stronger local processing (reducing cloud dependence), and more sophisticated personalization based on individual needs and preferences. AI safety remains crucial, with companies like Anthropic specifically working to ensure systems are “reliable, interpretable, and steerable” as capabilities advance.

In less than three decades, we’ve witnessed an extraordinary evolution from Clippy’s simple rule-based assistance to today’s sophisticated AI systems capable of understanding complex context, generating creative content, and reasoning through difficult problems – with development accelerating dramatically in just the past five years.

It’s not hyperbole to say this has been one of the most dramatic technological evolutions in modern history, changing how we interact with computers and setting the stage for AI to become an increasingly integral part of our daily lives.