Baby boomers might remember the PBS TV series Cosmos, in which famed astronomer Carl Sagan explained the mysteries of the universe. Now, NVIDIA has embarked on a more terrestrial—but equally impressive—journey to a new type of Cosmos.

Founder and CEO Jensen Huang held court with a 90-minute keynote on the first day of this year’s CES consumer electronics show in Las Vegas. I wrote an overview of the long list of new and upgraded products Huang unveiled. This article looks at two of the company’s announcements: the Cosmos generative world foundation models platform and the addition of generative AI capabilities to the NVIDIA Omniverse developer platform.

What is Cosmos—and What Does it Do?

NVIDIA describes Cosmos as “a platform comprising state-of-the-art generative world foundation models, advanced tokenizers, guardrails, and an accelerated video processing pipeline built to advance the development of physical AI systems such as autonomous vehicles (AVs) and robots.” Sounds interesting. Now, let’s examine what’s behind it and how it works.

Let’s start with “world foundation models.” These neural networks simulate real-world environments and predict accurate outcomes based on text, images, or video input. They are valuable for training and testing physical AI systems such as robots and autonomous vehicles. In non-technical terms, they act as virtual test tracks that help ensure AI-driven software performs as intended before it’s released into the real world.

Tokenizers are tools that break data into bite-sized pieces, called tokens, for natural language processing and machine learning. For text, they convert input into smaller units ranging from single characters to complete words. This process enables machines to work with human language by breaking it into pieces that are easier to analyze. It’s akin to how parents teach babies to talk by repeating sounds and small words to help develop language skills. The Cosmos tokenizers apply the same idea to images and video.
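As a rough illustration of the idea, the sketch below splits a sentence into character-level and word-level tokens and then maps the word tokens to integer IDs a model could consume. It is a toy text example, not the Cosmos tokenizers; the helper names (`char_tokens`, `word_tokens`, `build_vocab`, `encode`) are hypothetical and chosen only for this illustration.

```python
def char_tokens(text: str) -> list[str]:
    """Smallest granularity: one token per character."""
    return list(text)


def word_tokens(text: str) -> list[str]:
    """Coarser granularity: one token per whitespace-separated word."""
    return text.split()


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Replace each token with its integer ID."""
    return [vocab[token] for token in tokens]


sentence = "robots learn the world"
words = word_tokens(sentence)
vocab = build_vocab(words)
ids = encode(words, vocab)

print(char_tokens("AV"))  # character-level tokens
print(words)              # word-level tokens
print(ids)                # integer IDs for the word tokens
```

Production tokenizers typically sit between these two extremes and learn sub-word units from data, but the principle of turning raw input into a sequence of discrete IDs is the same.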

As the name suggests, guardrails help large language models (LLMs) stay on course and produce only the intended outputs of the specified task. Accelerated video processing enables developers to “process and annotate tens of millions of hours of video in days vs. years on CPUs alone,” said Rev Lebaredian, vice president of Omniverse and simulation technology at NVIDIA, in an analyst briefing before Huang’s keynote. As for what Cosmos does, Lebaredian summed it up this way: “Cosmos will dramatically accelerate the time to train intelligent robots and advanced self-driving cars.”

The simplest explanation of Cosmos came from Huang, who said during his keynote that it would do for physical AI what GPT did for generative AI. On a related note, Huang said he believes physical AI, such as robots and autonomous vehicles, is the next phase of the AI revolution after generative and agentic AI.

How Developers Will Use Cosmos

With the capabilities described above, how can developers use Cosmos? Once they process their data using the Cosmos data pipeline, they can use Cosmos to search, index, and fine-tune data sets. Lebaredian said NVIDIA’s AV partners are already using it to find specific data, such as wildlife or emergency vehicles, to train autonomous vehicles. This process, called reinforcement, “uses AV feedback to test, validate, or benchmark how their AI models perform different tasks,” he said.

It’s all part of NVIDIA’s focus on creating a wide range of tools to make AI practical and valuable for various applications. All elements are openly available on Hugging Face, the online AI community, and NVIDIA NGC, the company’s portal for enterprise services, software, management tools, and support for end-to-end AI and digital twin workflows.

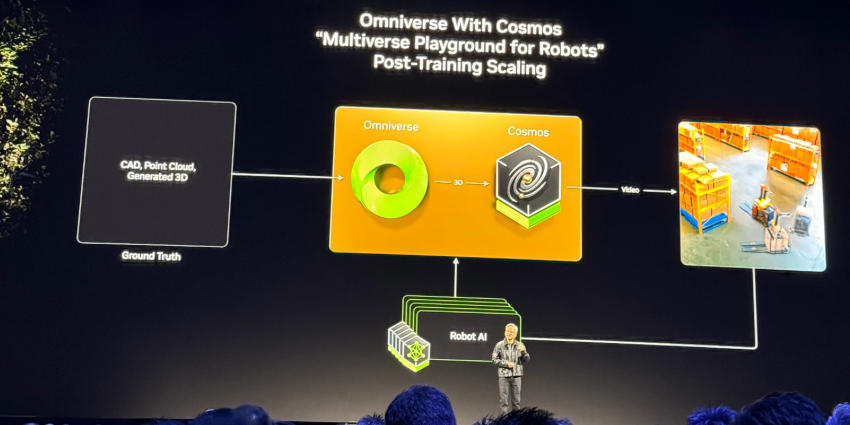

The Powerful Combination of Cosmos and Omniverse

NVIDIA designed Cosmos to work closely with Omniverse, the company’s operating system for building 3D, physics-grounded digital twins and virtual worlds, according to Lebaredian. The company also announced the addition of generative physical AI to Omniverse. Now, developers can integrate OpenUSD, NVIDIA RTX rendering technologies, and generative physical AI into existing software tools and simulation workflows. Key applications include robotics, autonomous vehicles, and vision AI. Global organizations are using Omniverse to develop new products and services designed to accelerate the next era of industrial AI.

In NVIDIA’s Omniverse announcement at CES, Huang said physical AI will revolutionize the $50 trillion manufacturing and logistics industries.

“Everything that moves—from cars and trucks to factories and warehouses—will be robotic and embodied by AI,” he said. He added that the Omniverse digital twin operating system and Cosmos physical AI are “the foundational libraries for digitalizing the world’s physical industries.”

In an announcement at CES, NVIDIA said that combining Omniverse and the Cosmos world foundation models “creates a synthetic data multiplication engine—letting developers easily generate massive amounts of controllable, photoreal synthetic data. Developers can compose 3D scenarios in Omniverse and render images or videos as outputs. These can then be used with text prompts to condition Cosmos models to generate countless synthetic virtual environments for physical AI training.”
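The “multiplication” in that description can be pictured with a small sketch: a handful of rendered scenes combined with a handful of text prompts yields every scene-prompt pairing as a separate generation job. The code below is only an illustration of that combinatorial idea. It calls no Omniverse or Cosmos API, and the file names, prompts, and the `conditioning_jobs` helper are all hypothetical.

```python
from itertools import product

# Hypothetical stand-ins for videos rendered from 3D scenarios.
rendered_scenes = ["warehouse_aisle.mp4", "loading_dock.mp4"]

# Hypothetical text prompts describing the conditions to vary.
prompts = ["night, wet floor", "bright daylight", "heavy fog"]


def conditioning_jobs(scenes: list[str], prompts: list[str]) -> list[dict[str, str]]:
    """Pair every rendered scene with every prompt (Cartesian product)."""
    return [{"scene": scene, "prompt": prompt} for scene, prompt in product(scenes, prompts)]


jobs = conditioning_jobs(rendered_scenes, prompts)

print(len(jobs))  # number of scene-prompt pairs
for job in jobs:
    print(job)
```

Two scenes and three prompts already give six distinct jobs, and the count grows multiplicatively as either list gets longer.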

In his CES keynote, Huang announced four new blueprints that make it “easier for developers to build Universal Scene Description (OpenUSD)-based Omniverse digital twins for physical AI.” The blueprints include:

- Mega, powered by Omniverse Sensor RTX APIs, is used to develop and test robot fleets at scale in an industrial factory or warehouse digital twin before deployment in real-world facilities.

- Autonomous vehicle simulation, also powered by Omniverse Sensor RTX APIs, lets AV developers replay driving data, generate new ground-truth data, and perform closed-loop testing to accelerate their development pipelines.

- Omniverse spatial streaming to Apple Vision Pro helps developers create applications for immersive streaming of large-scale industrial digital twins to Apple’s mixed-reality headsets.

- Real-time digital twins for computer-aided engineering are reference workflows built on NVIDIA CUDA-X acceleration, physics AI, and Omniverse libraries that enable real-time physics visualization.

These new and expanded products and NVIDIA’s other CES announcements demonstrate the company’s ongoing commitment to delivering powerful AI innovations.